Authors: Frits Jensen (IBM Denmark), Grzegorz Smolko (IBM Poland).

In this article we compare the performance of the latest versions of four application server runtimes: IBM WAS, IBM Liberty, Red Hat JBoss EAP and Red Hat WildFly. You may ask how performance relates to your environment and to your choice of application server. Besides the obvious advantage of serving requests faster, a higher-performing application server platform serves more requests for more users with fewer processes and less hardware, which can reduce the Total Cost of Ownership (TCO) of your middleware infrastructure. TCO includes the cost of software licenses and support for operating systems and application servers, as well as provisioning, management, maintenance and monitoring effort, electrical power, real estate, and so on. Costs increase when more processing power is needed and more servers are added. Some customers deploy their applications on thousands of application servers running in cloud environments, and at that scale performance matters even more.

Various benchmarks have been created to let customers compare application servers. One of the most recognized is SPECjEnterprise. Since 2004, IBM and Oracle (BEA) have continuously published performance data comparing WebSphere and WebLogic products by measuring full-system performance across the application servers, databases and supporting infrastructure. IBM recently published results comparing the latest WAS and WebLogic editions. The results range from 32% to 76% in favor of WAS.

Red Hat, on the other hand, has never participated in the SPECjEnterprise comparisons with its JBoss EAP and WildFly application server products. Therefore, to compare the performance of IBM’s WebSphere Application Server and Liberty against Red Hat’s JBoss EAP and WildFly, we have been running these tests ourselves from time to time.

For the performance testing we used the Apache DayTrader application. This full-fledged Java EE application comes in two versions, one for Java EE 6 and one for Java EE 7. The DayTrader 3 application uses a variety of Java EE 6 APIs such as Servlet 3.0, JSP 2.2, JSF 2.0, JPA 2.0, EJB 3.1, JDBC 4.0, JMS 1.1, Message-Driven Beans and JAX-RS 1.1. The DayTrader 7 application uses the updated versions of the same APIs from Java EE 7. DayTrader simulates an online stock trading application and is designed to have no bottlenecks. The source code for DayTrader v3 is publicly available, so anyone can use it in their own environment to perform similar tests (v7 will be available soon). In the real world your applications may not be constructed this optimally and may have bottlenecks. In that case the performance difference between the compared servers would probably be smaller. However, to compare the efficiency of different application server implementations, we must use an application that is itself as optimized as it can be. It must also use all of the major enterprise-level Java EE APIs, so that the performance test effectively exercises and measures the achievable performance of the core components of the application server.

At the time of this writing, WAS Full Profile 8.5.5.5 and JBoss EAP 6.4 do not support Java EE 7 and thus could not run the DayTrader 7 test application. Therefore we used DayTrader 3 (Java EE 6 based) to test WAS and JBoss EAP.

As you can see in the diagram above, the Apache DayTrader Java EE application can be configured to use either plain JDBC or JPA for database access, and JMS asynchronous messaging can be enabled or disabled, which makes it possible to compare the different ways of accessing data. You can read more about the DayTrader application in this article on IBM developerWorks: “Measuring performance with the DayTrader 3 benchmark sample”.

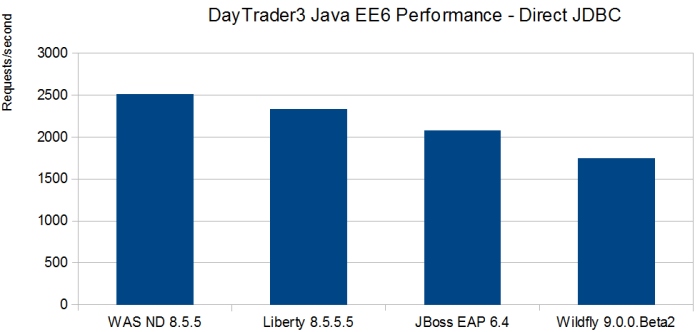

Comments on the results for Direct JDBC

For direct JDBC connectivity, WildFly 9.0.0 beta2 was the slowest performer. JBoss EAP is 19% faster than WildFly. Liberty is 33% faster and WAS is a whopping 43% faster.

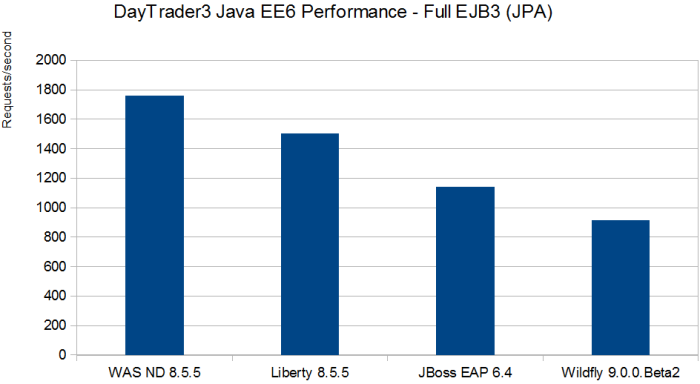

Comments on the results for Full EJB (JPA)

For JPA mode, WildFly 9.0.0 beta2 was again the slowest performer. JBoss EAP is 25% faster than WildFly. Liberty is 65% faster and WAS, at 93% faster, is almost twice as fast.
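
The percentages quoted for the two modes can be turned into relative throughput figures to compare any pair of servers, or to estimate how many server instances a given load would need. The following is only a minimal sketch: it assumes that every percentage is measured against WildFly 9.0.0 beta2 as the baseline and that capacity scales linearly with the number of servers. The function names are illustrative and not part of any benchmark tooling.

```python
import math

# Relative throughput derived from the quoted percentages.
# Assumption: each figure is relative to WildFly 9.0.0 beta2 (= 1.00).
RELATIVE_THROUGHPUT = {
    "jdbc": {"WildFly": 1.00, "JBoss EAP": 1.19, "Liberty": 1.33, "WAS": 1.43},
    "jpa": {"WildFly": 1.00, "JBoss EAP": 1.25, "Liberty": 1.65, "WAS": 1.93},
}


def percent_faster(mode, server, baseline):
    """Return how much faster `server` is than `baseline`, in percent."""
    table = RELATIVE_THROUGHPUT[mode]
    return (table[server] / table[baseline] - 1.0) * 100.0


def servers_needed(mode, server, baseline_servers):
    """Estimate the instances of `server` that match the capacity of
    `baseline_servers` WildFly instances (assumes linear scaling)."""
    return math.ceil(baseline_servers / RELATIVE_THROUGHPUT[mode][server])


if __name__ == "__main__":
    for mode in RELATIVE_THROUGHPUT:
        for server in ("Liberty", "WAS"):
            gain = percent_faster(mode, server, "JBoss EAP")
            print(f"{mode}: {server} vs JBoss EAP: {gain:.1f}% faster")
    print("WAS instances replacing 100 WildFly instances (JPA):",
          servers_needed("jpa", "WAS", 100))
```

Under these assumptions, WAS comes out roughly 20% faster than JBoss EAP in JDBC mode and roughly 54% faster in JPA mode. Real deployments rarely scale perfectly linearly, so the instance estimate is an upper bound on the savings rather than a prediction.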

Testing methodology

Application servers installed out of the box are typically not prepared for performance testing and must be tuned. We performed basic tuning based on publicly available guides from both vendors (here is an IBM WebSphere Performance Cookbook). We experimented with the individual application server settings to get the highest achievable throughput. Our aim was to drive the CPU load on the application server machine as high as possible while keeping the load on the backend database server machine moderate, to ensure that there was no bottleneck there. The test client machine and the database server therefore have generous processing power available, and the Trader database was performance-optimized following best practices to remove bottlenecks at that level as far as possible.

For the application server configurations we changed the important parameters on the tested servers: JVM memory settings, thread pool settings, connection pool settings and cached SQL statement settings. For the memory available to the server process, we set the minimum and maximum values that each server seemed to prefer, so that the server has generous memory and does not spend too much time on garbage collection. We set the thread pool maximum threads parameter (80) so that the server can service the concurrent clients. We set the datasource maximum connection pool size so that a database connection is always ready, and configured the SQL statement cache for the connections so that repeated calls to the same statement do not trigger repeated dynamic SQL statement compilation. For the JPA cache, we made sure caching was disabled on all the tested servers (the default for all tested servers).

Having done that, we ran the test scripts many times against each server, experimenting with small changes to the aforementioned settings. The purpose was to make sure that the described settings were optimal for the specific server being tested and that the selected set of settings did not favor a particular server. In fact, we spent no extra tuning effort on the WAS and Liberty products; most of our time was spent trying to make WildFly and JBoss EAP go as fast as they could.
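
One way to compare candidate settings across repeated runs is to look at the mean throughput together with the run-to-run variation, so that a single lucky run does not decide the outcome. The sketch below is not the authors' tooling. The setting labels, the throughput numbers and the 5% stability threshold are all hypothetical values chosen for illustration.

```python
import statistics


def summarize_runs(throughputs):
    """Return mean, sample standard deviation and coefficient of variation."""
    mean = statistics.mean(throughputs)
    stdev = statistics.stdev(throughputs) if len(throughputs) > 1 else 0.0
    return {"mean": mean, "stdev": stdev, "cv": stdev / mean}


def pick_best(results, max_cv=0.05):
    """Pick the setting with the highest mean throughput among those whose
    run-to-run variation (coefficient of variation) is at most `max_cv`."""
    summaries = {name: summarize_runs(runs) for name, runs in results.items()}
    stable = {n: s for n, s in summaries.items() if s["cv"] <= max_cv}
    if not stable:
        raise ValueError("no setting produced stable results; rerun the tests")
    return max(stable, key=lambda name: stable[name]["mean"])


if __name__ == "__main__":
    # Hypothetical requests-per-second figures, NOT measurements from the article.
    sample = {
        "setting_a": [980.0, 1010.0, 995.0],
        "setting_b": [1100.0, 1090.0, 1110.0],
        "setting_c": [1150.0, 900.0, 1200.0],  # fast on average but unstable
    }
    print("best stable setting:", pick_best(sample))
```

Filtering on variation first is a design choice: a configuration that is occasionally very fast but erratic is usually a sign of an unresolved bottleneck, such as garbage collection pauses or an exhausted connection pool, rather than a genuinely better setting.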

We used the same Oracle JVM for JBoss EAP, WildFly and the Liberty server. For WAS we used IBM’s J9 JVM, as that is required by the product.

Tested configuration

Server configuration files can be found here.

We used three tiers implemented on three different machines: a test machine, an application server machine and a database server machine. The test machine runs the JMeter test client, configured with multiple parallel clients (we used 40). The application server machine runs the JBoss Enterprise Application Platform, WebSphere Application Server, WebSphere Liberty and WildFly application servers. The database machine runs the DB2 database server.
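
With a fixed number of parallel clients, throughput and response time are linked by Little's Law, which also bounds how many requests can be inside the server at once. The sketch below assumes that each of the 40 clients has at most one request outstanding and uses a hypothetical response time; the article does not report response times or think times.

```python
def closed_loop_throughput(clients, response_time_s, think_time_s=0.0):
    """Little's Law for a closed system: X = N / (R + Z), where N is the
    number of clients, R the response time and Z the think time (seconds)."""
    if clients <= 0 or response_time_s <= 0 or think_time_s < 0:
        raise ValueError("clients and response time must be positive")
    return clients / (response_time_s + think_time_s)


def requests_in_flight(throughput_rps, response_time_s):
    """Average number of requests inside the server: N = X * R."""
    return throughput_rps * response_time_s


if __name__ == "__main__":
    clients = 40            # parallel JMeter clients, as stated in the article
    response_time = 0.025   # hypothetical 25 ms average response time
    x = closed_loop_throughput(clients, response_time)
    print(f"throughput: {x:.0f} requests/s")
    print(f"requests in flight: {requests_in_flight(x, response_time):.0f}")
```

With no think time, the number of requests in flight equals the number of clients, so under this assumption a thread pool maximum of 80 leaves headroom over the 40 concurrent clients. Asynchronous work such as JMS message processing would use additional threads that this simple model does not account for.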

On all machines the operating system is Windows Server 2008 R2. The test client on the test machine is JMeter version 2.12 running on Oracle 64-bit JDK 8 update 40. The application servers on the application server machine are:

- JBoss EAP 6.4 and WildFly 8.2 and 9.0.0 beta2 running on Oracle 64-bit JDK 8 update 40

- WAS ND 8.5.5.5 running on IBM 64-bit JDK 7 v7.0.8.10

- Liberty Profile 8.5.5.5 running on Oracle 64-bit JDK 8 update 40

The database server on the database machine is DB2 Server EE edition version 10.5 Fix Pack 5.

For more information about WebSphere performance and additional competitive benchmarks comparing WAS, JBoss, Tomcat, etc., please take a look at this presentation on SlideShare (please note that the performance benchmarks shown in the presentation below were done by a different team in late 2014 and early 2015 and are similar, but not the same as presented in this article):

Cloud and virtualization

For virtualized, IaaS cloud-based infrastructure, Docker is quickly becoming the standard. Docker can be described as a lightweight Linux-based container technology that provides efficient virtualization with minimal overhead. It combines part of the functionality you get with VMware Workstation (a runnable and shippable software container) with some hypervisor functionality, such as storing and running multiple virtualized images. By using a prebuilt Docker image (either one already available from Docker Hub or one you create yourself), you can use Docker container topologies for the application server runtime. This enables quick provisioning of an environment for any purpose, be it development, test or production. But performance remains as important as ever, so let’s see the results of a throughput comparison between Liberty and WildFly. To compare system performance in the Docker topology, we created the relevant Docker images with the DayTrader Java EE 7 application deployed and compared the achievable throughput for Liberty on Docker versus WildFly on Docker. For more information on Liberty on Docker and to download the Liberty Docker image, see this page.

I have a couple of questions WRT the use of Docker for the tests. First, how was the WildFly Docker image created? Was it created from scratch by IBMers or was the JBoss community image extended? https://github.com/jboss-dockerfiles/wildfly

In a related question, was the Liberty docker image on GitHub used as the base for the docker image built? https://registry.hub.docker.com/_/websphere-liberty/

If the docker files were built from the above sources then it should be noted each image was running a different host OS at the base and a different JDK. JBoss using CentOS + OpenJDK. IBM using ubuntu + IBM JDK. The article doesn’t get into these relevant details.

Also, it appears that the tests were run on a Windows 2008 server. Why would you run docker on that OS instead of a purpose built cloud and container OS like Red Hat Atomic? Weren’t the IBMers running the docker images forced to use extra utilities like VirtualBox and Boot2Docker? Certainly VirtualBox is not known for being highly performant. The article does not contain the details needed to re-create the test as run by IBMers.

It should be noted that I erroneously used the brand name JBoss in my post when I should have used the actual community project name WildFly. Important for this to be clear.

The docker tests were done by a different team and used a different hardware / software setup. I will ask authors to update the article with details on the Docker configuration.

You should open source the complete test methodology, setup, etc., as defined in the IBM terms and conditions for benchmarks. Why is IBM not doing themselves what they demand of others who want to run benchmarks?

Rich, the methodology of the test, DayTrader source code and setup are described in this article. I do not understand your comment. Have you read the entire article? What *exactly* is missing in your opinion?

Hi Roman. I sure did read this “article” and honestly I would not be able to re-create the test based on the contents of this article. Perhaps you are too close to the subject and don’t see the open holes like the database setup, JDBC configuration, and application platform tuning parameters. And that is just a short list. But honestly I know pointing this out won’t make a difference. I have never seen IBM run a competitive benchmark using the same rigor and demands that appear in IBM license agreement L-JTHS-94HMLU. Seems to me that IBM talks the talk but does not walk the walk.

I have posted several performance comparisons – including this one – http://whywebsphere.com/2015/03/12/ibm-mq-vs-apache-activemq-performance-comparison-update/ that meet and exceed IBM’s own requirements (license agreement L-JTHS-94HMLU) for the benchmarks you mention in your comment. So yeah, we do walk the walk :-).

Let me put this a different way. For legal reasons, let me keep this hypothetical. Let’s say I could browse through unpublished benchmark data I have on Red Hat JBoss Middleware outperforming a variety of IBM middleware products. Then let’s say that I decided to publish that data using the same level of rigor you used in this article and other articles/presentations mentioned. Would you go to IBM management and say that what I published should not be demanded by IBM to be retracted because it does not appear to meet the rigor in IBM license terms for benchmarking? Key justification of course being that any such publication would meet or exceed the standard of detail you have used.

> Would you go to IBM management and say that what I published should not be demanded by IBM to be retracted because it does not appear to meet the rigor in IBM license terms for benchmarking?

I am not a lawyer and can’t answer on behalf of IBM. I think this post describes most of the details (except for specific tuning settings). I will talk to the authors and suggest that they publish the configuration files. I have personally done several benchmarks (such as MQ vs AMQ and a few others) and I published source code, installation instructions and all of the configuration files.

The authors have posted the server configuration files and updated the article with a link to download them.
